What is Robots.txt?

How to check Robots.txt

This is a text file that instructs web robots (search engines) on how to access your website. With this file you can better control how bots access and index pages on your website.

However, it is important to clarify that it is not a way from preventing search engines from crawling your site. To keep a web page out of SERPs, you should use noindex tags or directives.

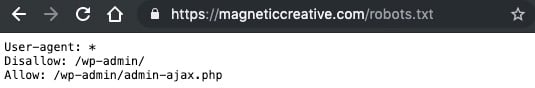

Robots.txt is accessible by typing in '/robots.txt' after the domain in your browser
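
The same lookup can be scripted. The snippet below is a minimal sketch using only Python's standard library; `robots_url` is a hypothetical helper name and `https://example.com` is a placeholder domain, neither of which comes from a real site.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt location for the site that serves page_url."""
    parts = urlsplit(page_url)
    # robots.txt sits at the root of the host, so the path, query string
    # and fragment of the original URL are discarded.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Placeholder URL, used only to show the call.
print(robots_url("https://example.com/blog/post?id=1"))
```

The resulting URL can then be opened in a browser or fetched with any HTTP client.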

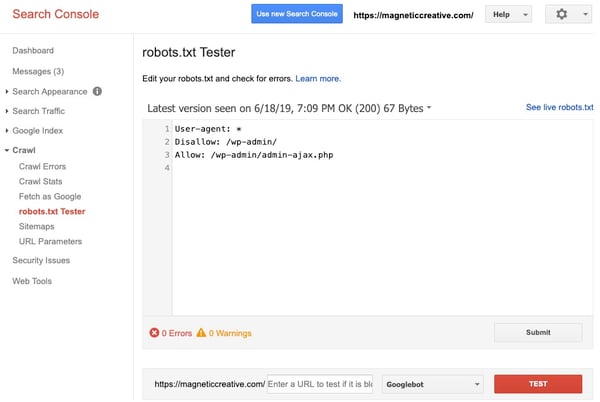

You can also use Google Search Console:

Navigate to Crawl > Robots.txt tester.

Despite the use of the terms "allow" and "disallow", the directives are purely advisory and rely on the compliance of the web robot.
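
To illustrate how a compliant robot reads these directives, here is a small sketch based on Python's standard `urllib.robotparser` module. The rules in `SAMPLE_RULES`, the `ExampleBot` user agent and the `is_allowed` helper are all made up for this example and do not describe any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
SAMPLE_RULES = """\
User-agent: *
Allow: /private/press/
Disallow: /private/
"""

parser = RobotFileParser()
# parse() accepts the file's lines directly, so no network request is made.
parser.parse(SAMPLE_RULES.splitlines())


def is_allowed(path: str, agent: str = "ExampleBot") -> bool:
    """Report whether the sample rules permit `agent` to crawl `path`."""
    return parser.can_fetch(agent, path)


for path in ("/index.html", "/private/report.html", "/private/press/kit.html"):
    print(path, is_allowed(path))
```

Note that the parser only reports what the file asks for. Nothing in the file stops a robot that skips this check from requesting `/private/report.html` anyway, which is why the directives are advisory.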